An AI Proof of Concept (PoC) can be a crucial step in your AI journey, yet many encounter challenges. By some estimates, more than 80% of AI projects never make it to production [1]. While that figure might look a little frightening, let’s take a different perspective: PoC failures are essential.

We believe that failure is where real progress starts. Each breakdown exposes the gaps in readiness, strategy, or alignment. The key is to learn from failure, adapt your approach, and use that insight to increase your chances of success next time.

In this article, we look at what might have gone wrong in a failed PoC and what you can do differently next time to increase your chances of success.

Defining the AI Proof of Concept

PoC stands for Proof of Concept: a small-scale project designed to test whether a solution, in this case an AI solution, is technically feasible and capable of delivering value before it's fully developed and deployed in a real-world business environment. The goal of a PoC is to demonstrate that a proposed AI model, algorithm, or approach can solve a specific problem or improve a particular process.

In business terms, an AI PoC helps stakeholders assess the potential return on investment (ROI), uncover technical risks early, and align AI capabilities with strategic goals. It serves as a critical checkpoint before committing significant resources to a full-scale AI deployment, and is a common step in innovation cycles across industries, especially when implementing emerging technologies like AI.

Why do AI Proofs of Concept fail?

- Selecting the right AI use case

Too often, we see people latch onto AI as the solution to a problem they can’t articulate. A successful AI initiative must begin with problem framing, not technology exploration. AI may also already be in use by your people without you realising: “Shadow AI”, as it’s known, is common when a company doesn’t have a clear AI policy and people try to solve the problems they face on their own. Sometimes the selected use case is too hard or too small, with no path to scale. It can also be misaligned with the business’s constraints, such as time, data, or regulation.

Use case selection should be based on:

- Business value

- Feasibility

- Data availability

- Ethical and regulatory risk

Look at the real-life problem you’re facing and decide if AI is the right solution. The truth is, AI might not be the answer. The right use case will expose itself if you work in this way.
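As a rough illustration, the four selection criteria above can be turned into a simple scoring sheet. This is only a sketch: the weights, the 1 to 5 scales, and the example use cases are hypothetical placeholders, not recommended values, and the risk score is inverted so that lower risk ranks higher.

```python
from dataclasses import dataclass

# Hypothetical weights for illustration only; tune them to your own priorities.
WEIGHTS = {
    "business_value": 0.4,
    "feasibility": 0.25,
    "data_availability": 0.25,
    "risk": 0.1,
}


@dataclass
class UseCase:
    name: str
    business_value: int     # 1 (low) to 5 (high)
    feasibility: int        # 1 (hard) to 5 (easy)
    data_availability: int  # 1 (no usable data) to 5 (clean, accessible data)
    risk: int               # 1 (low ethical/regulatory risk) to 5 (high)


def score(uc: UseCase) -> float:
    """Weighted score on a 1-5 scale; risk is inverted so lower risk scores higher."""
    total = (
        WEIGHTS["business_value"] * uc.business_value
        + WEIGHTS["feasibility"] * uc.feasibility
        + WEIGHTS["data_availability"] * uc.data_availability
        + WEIGHTS["risk"] * (6 - uc.risk)
    )
    return round(total, 2)


def rank(use_cases: list[UseCase]) -> list[UseCase]:
    """Return use cases ordered from highest to lowest score."""
    return sorted(use_cases, key=score, reverse=True)


# Made-up candidates, purely to show the mechanics.
candidates = [
    UseCase("Chatbot for everything", 3, 2, 2, 4),
    UseCase("Invoice triage", 4, 4, 5, 2),
]

for uc in rank(candidates):
    print(f"{uc.name}: {score(uc)}")
```

A sheet like this does not make the decision for you, but it forces each criterion to be discussed explicitly, including whether AI is the right tool at all.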

- Assessing business readiness for AI

Even the best algorithms fail in the wrong environment. Readiness is about more than tech - you also need to consider culture, compliance, and data. Break down readiness into:

- Data maturity and tech debt: If your data is unstructured, siloed, or low quality, your AI can’t perform.

- Governance and compliance: If the business says you can’t use AI, or your industry has tight restrictions, you're blocked before you begin.

- Change mindset: AI initiatives often fail because employees don’t trust, understand, or want to use them.

According to the Ataccama Data Trust Report 2025, 68% of Chief Data Officers named poor data quality as their top challenge, and it is often the key reason AI projects stall. Without solid foundations, no model, no matter how advanced, can deliver.

- You weren’t ambitious enough

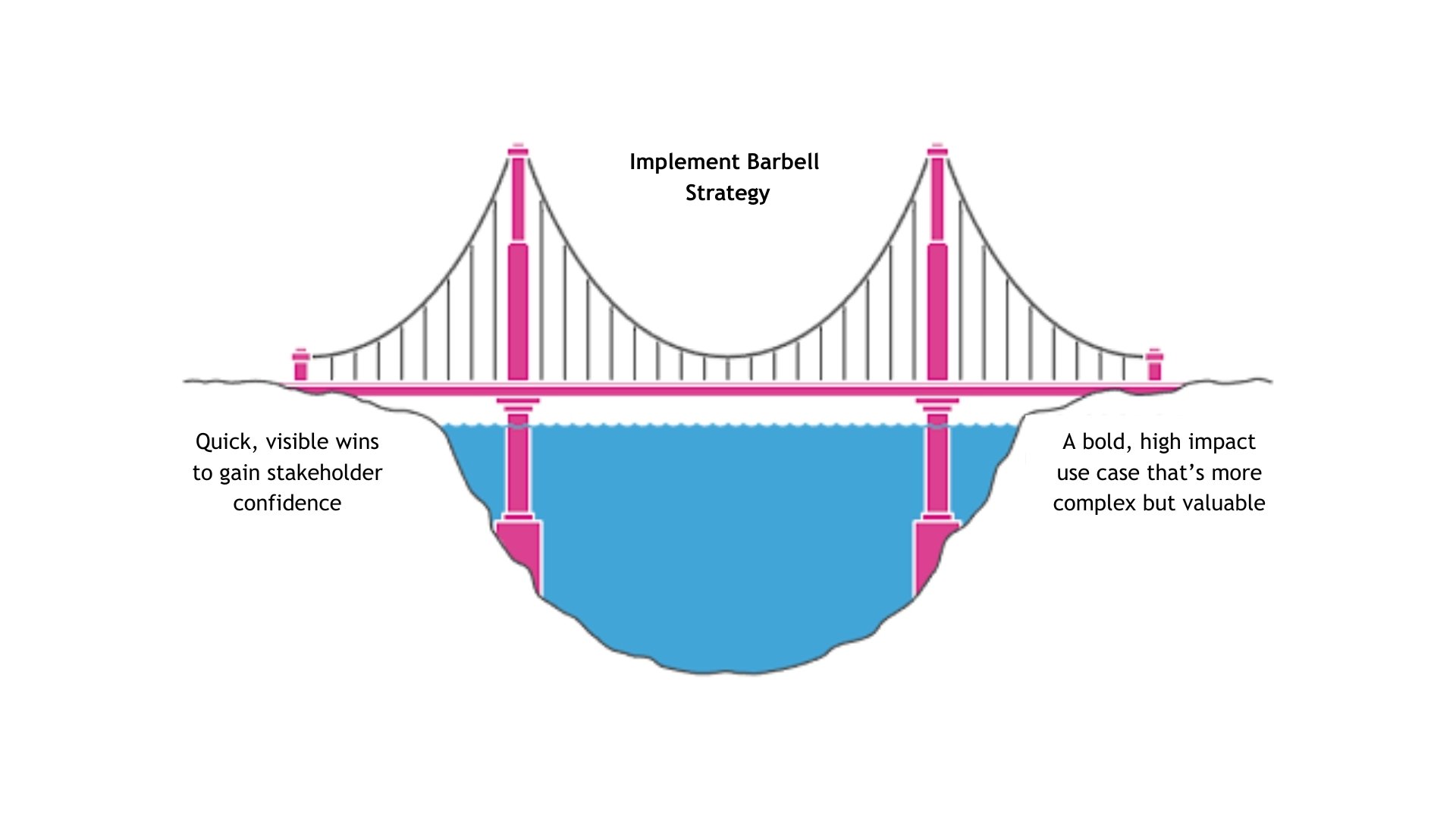

Many PoCs aim for “quick wins,” thinking low complexity equals low risk. But in AI, low complexity usually means low value. This might seem counterintuitive, but automating a process no one cares about is a waste of time. Instead, use a barbell strategy, which is a decision-making and risk management approach that balances two extremes: one very safe and low-risk, and the other high-risk but high-reward, while deliberately avoiding the middle ground.

It originates from finance, but is now widely applied to business strategy, innovation, and AI project portfolios.

The diagram below illustrates the barbell strategy.

- On one end: Quick, visible wins to gain stakeholder confidence.

- On the other end: A bold, high-impact use case that’s harder but meaningful.

If your PoC failed because it wasn’t ambitious enough, you might have missed the opportunity to show real value.

How to overcome a lack of ambition in AI PoCs

- You didn’t define success

Many failed PoCs weren’t actually failures; they just didn’t have a success metric. You can’t hit a target you never defined, so make sure you know what you’re trying to achieve - is it cost savings, accuracy, adoption or something else?

Steps to create a successful AI PoC

- Run a retrospective

Bring together everyone who touched the PoC - developers, SMEs, users, and sponsors. Run a blameless post-mortem to find out what worked, what didn’t, and why. These learnings will be invaluable, and the cross-functional insight will help avoid blind spots next time.

- Set clear success criteria

Define your validation metrics so that you know what good looks like. This involves choosing how you'll measure the performance of your model (or system) during the validation phase - perhaps it’s a 10% increase in accuracy or a 15-minute reduction in response time. Do this before you test the model in the real world or use it in production. To get it right, you need to know your problem, understand your goals, and then pick metrics that reflect your priorities.
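One way to make success criteria concrete is to write them down as explicit targets before the PoC starts, then check results against them. The sketch below is illustrative: the baselines and observed results are hypothetical, and the "10% increase in accuracy" is read here as ten percentage points, which is an assumption you should settle explicitly for your own metric.

```python
from dataclasses import dataclass


@dataclass
class Criterion:
    name: str
    baseline: float
    target: float
    higher_is_better: bool = True

    def met(self, observed: float) -> bool:
        """True if the observed value reaches the target in the right direction."""
        if self.higher_is_better:
            return observed >= self.target
        return observed <= self.target


# Hypothetical baselines; targets mirror the two examples in the text.
criteria = [
    # Accuracy up by ten percentage points (assumed reading of "10% increase").
    Criterion("accuracy", baseline=0.70, target=0.80),
    # Response time down by 15 minutes; lower is better.
    Criterion("response_time_min", baseline=45, target=30, higher_is_better=False),
]


def evaluate(criteria: list[Criterion], observed: dict[str, float]) -> dict[str, bool]:
    """Map each criterion name to whether its target was met."""
    return {c.name: c.met(observed[c.name]) for c in criteria}


# Made-up PoC results for illustration.
results = evaluate(criteria, {"accuracy": 0.82, "response_time_min": 33})
for name, ok in results.items():
    print(f"{name}: {'met' if ok else 'not met'}")
```

Writing the targets down first means a PoC ends with a clear verdict per metric rather than a debate about whether it "worked".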

Re-engage senior leaders with your learnings and outline how the next attempt is different. Show them you’re learning from failure, not repeating it. Their support unlocks resources, funding, and organizational will. Then set time-bound, measurable, and realistic goals. We recommend a 90-day or less PoC cycle. Fast iterations help you learn quickly and pivot if needed.

- Understand your constraints

Map your environment before you build again. What data do you actually have? What legal frameworks apply? Do you have enough resources to build and test in a realistic timeframe? Understand your parameters and design within them.

- Prioritize use cases intelligently

Ask yourself: what is causing pain right now? Only then ask if AI is the right tool, and then go back to the complexity vs. impact matrix:

- High impact, high complexity - your moonshot.

- High impact, low complexity - your quick win.

Sometimes you might want to break a moonshot down into quicker wins, but on the whole, the value is in the high-impact, high-complexity projects, so that's where you should aim.
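The complexity vs. impact matrix can be sketched as a small classifier. The 1 to 5 scale and the threshold of 3 are hypothetical choices, and the "deprioritize" label for low-impact work is an assumption added for completeness; the text above only names the two high-impact quadrants.

```python
def quadrant(impact: int, complexity: int, threshold: int = 3) -> str:
    """Place a use case on the complexity vs. impact matrix.

    Scores are assumed to run from 1 (low) to 5 (high); anything at or
    above the threshold counts as "high".
    """
    high_impact = impact >= threshold
    high_complexity = complexity >= threshold
    if high_impact and high_complexity:
        return "moonshot"
    if high_impact:
        return "quick win"
    # Low-impact work is not covered by the matrix in the text;
    # treating it as something to deprioritize is an assumption.
    return "deprioritize"


# Made-up backlog for illustration: name -> (impact, complexity).
backlog = {
    "Claims fraud detection": (5, 4),
    "Meeting summary emails": (4, 2),
    "Auto-tagging old archives": (2, 2),
}

for name, (impact, complexity) in backlog.items():
    print(f"{name}: {quadrant(impact, complexity)}")
```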

- Build, iterate, repeat

Don’t wait for perfection: learn and adapt. Use agile principles because this is how AI gets better. Every failed PoC gives you clearer data, sharper hypotheses, and a better map.

Turning AI PoC failure into future success

AI PoCs will fail, but what matters is how you respond.

When handled well, a failed PoC can become your most valuable asset; it reveals what your business truly needs, exposes the hidden blockers, and helps you reframe the path to success.

At its best, AI is about decision intelligence and unlocking new ways of working. To get there, learn from what didn’t work and set bold but realistic goals - your next PoC must be tightly scoped, business-led, and designed with a path to scale. Remember: failure is the foundation for real, sustainable transformation.

If you need help building your AI roadmap, talk to us. We have experience in helping businesses turn failed experiments into success stories.
